Symbody

2024

Research project

Symbody is an artistic research project that explores how artificial intelligence can study, interpret, and interact with human body movement. Led by visual artist Natan Sinigaglia, the project investigates new ways to capture and visualize the subtle qualities of movement through machine learning and real-time graphics.

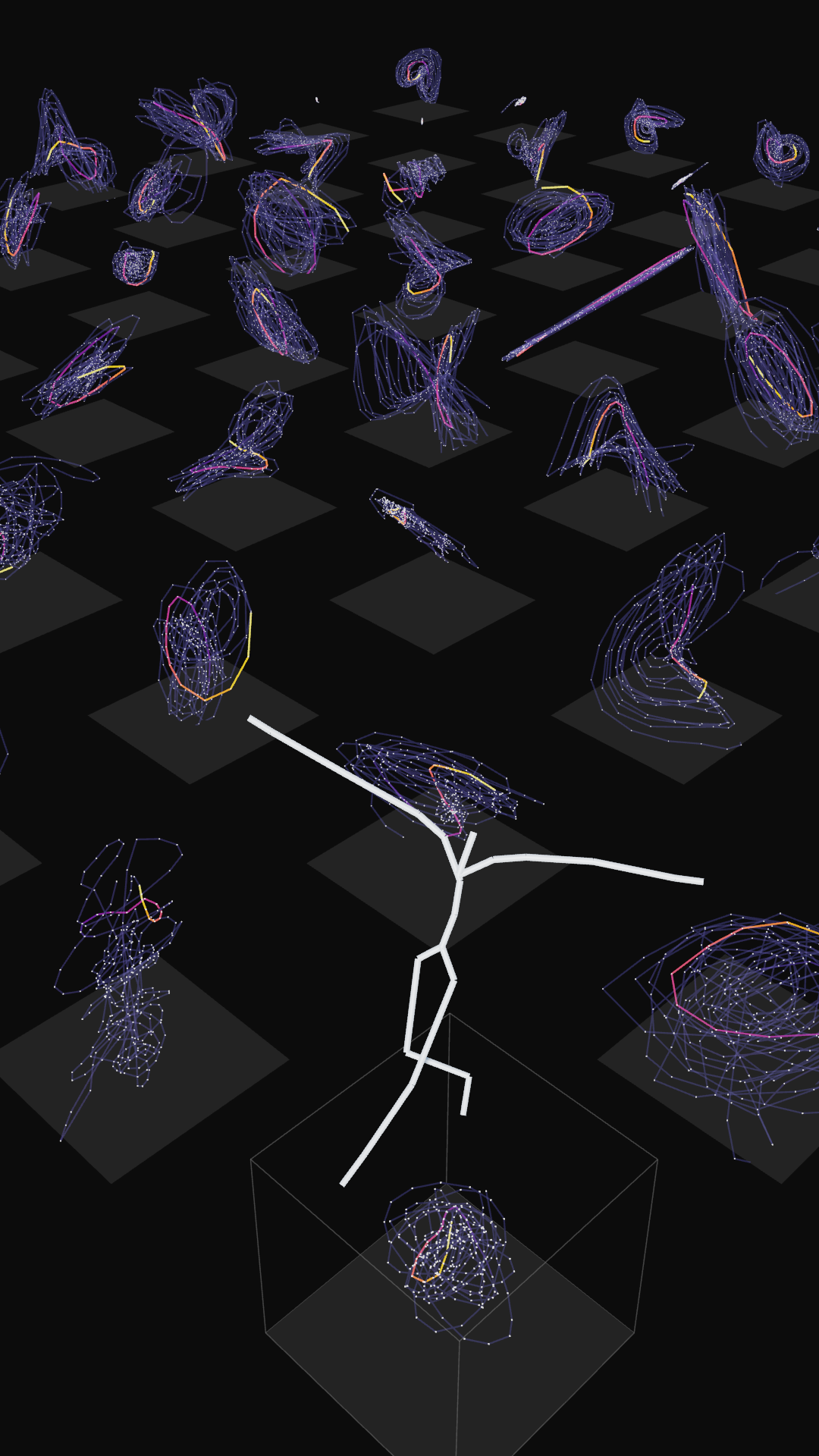

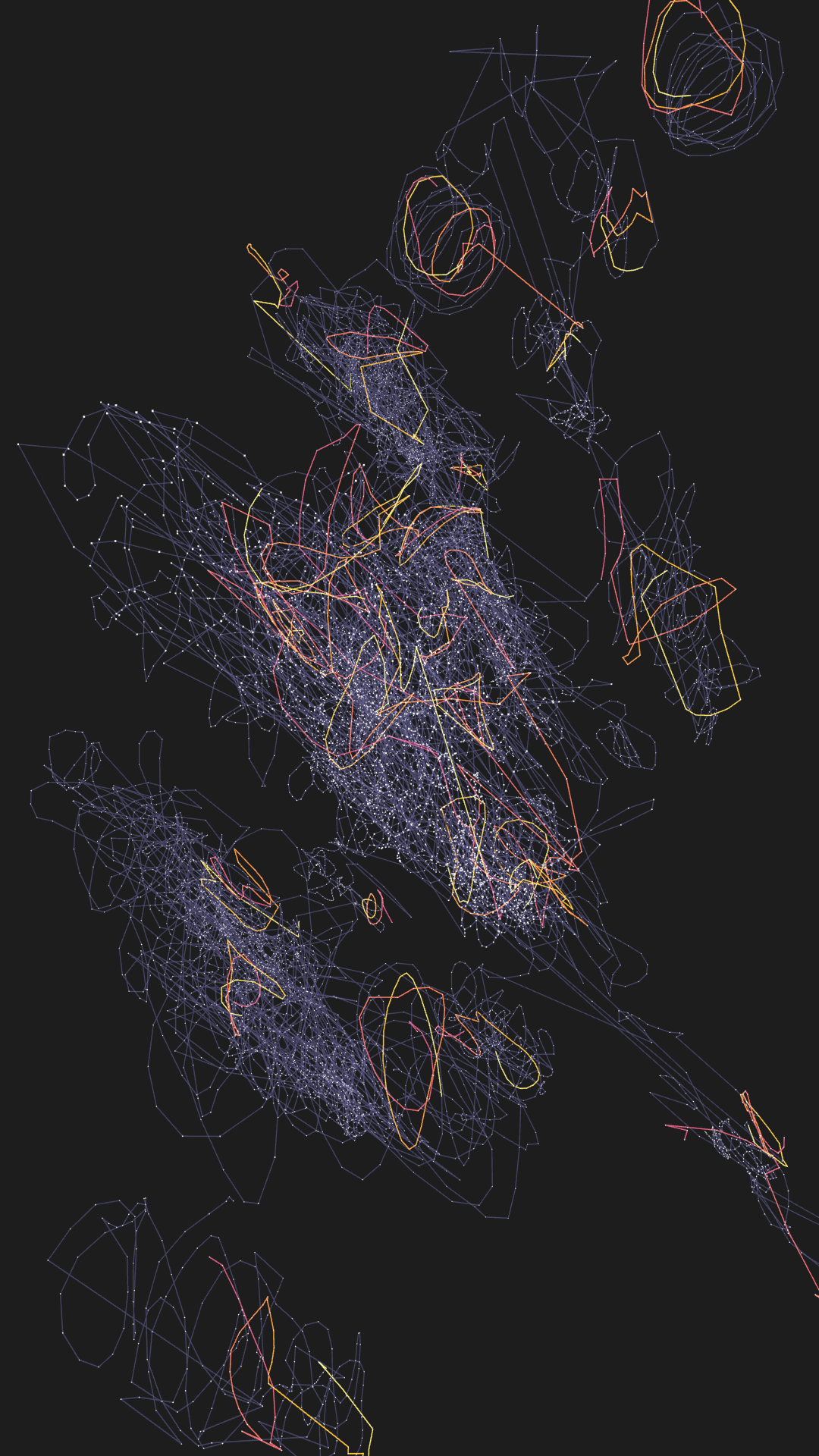

At its core, Symbody functions as an intermediary between human movement and machine perception. The project uses auto-encoders, neural networks that learn to compress input data into a compact internal representation (a latent space) and then reconstruct it. With these networks it captures movement qualities in ways that traditional motion capture cannot. Rather than only tracking where a body moves in space, Symbody aims to understand and visualize how it moves, revealing patterns and expressions that might escape human perception.

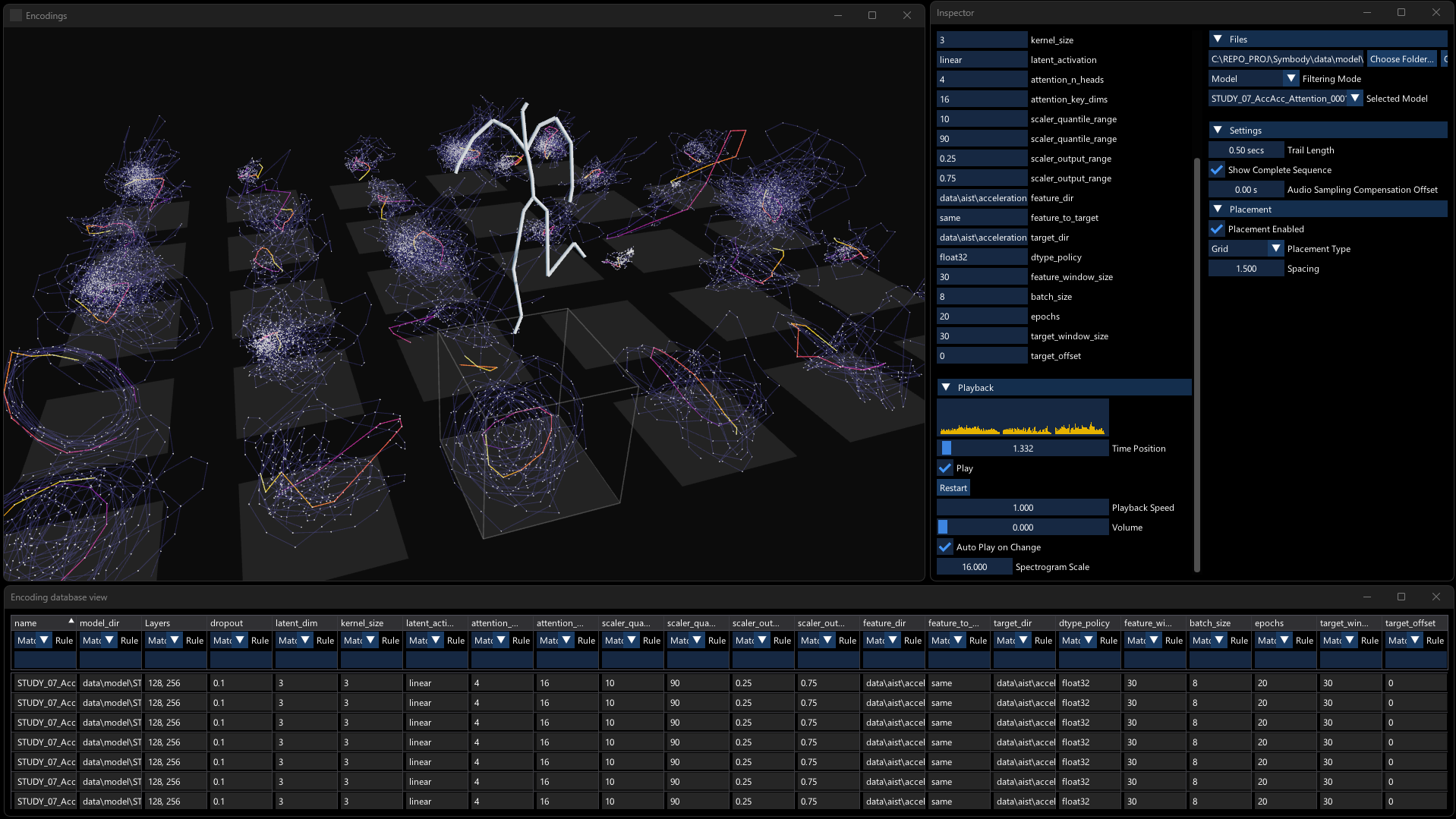

During development, three key technical components emerged: a customizable machine learning pipeline, a visualization tool for the database of trained models, and a prototype for a real-time interactive installation. These tools act as intermediaries between raw movement data, machine processing, and human perception. The resulting prototype software lets users explore latent-space representations of movement data, opening new ways of analyzing and understanding human motion. Because the system processes movement data in real time, it produces immediate visual representations that reveal otherwise hidden qualities of motion, offering a new perspective on human physicality and expression through the lens of artificial intelligence.

Although still at the prototype stage, the project shows potential for several fields. In healthcare, it could contribute to the early detection of movement disorders. Sports professionals might use it for detailed performance analysis, while choreographers and dancers could explore new dimensions of movement creation.

Through collaboration with TMC, In4Art, HEKA Lab, and the Institute of Artificial Intelligence Serbia, Symbody has evolved from an artistic concept into an experimental platform that bridges human intuition and machine perception. The project demonstrates how artificial intelligence can enhance our understanding of human expression, suggesting new possibilities for both artistic exploration and practical applications in movement analysis.

Credits

Artist and Programmer: Natan Sinigaglia

Real-time graphics: custom software developed in vvvv

Machine-Learning development: TMC

Kanthavel Pasupathipillai (TMC)

Celeste Dipasquale (TMC)

Sound synthesis and spatialization: Pina and HEKA Lab, Koper

Mentors:

Lija Groenewoud van Vliet and Rodolfo Groenewoud (In4Art)

Dejan Mircetic (Institute of Artificial Intelligence Serbia)

Fernando Cucchietti (BSC)

Vincenzo Cutrona (SUPSI)

Sergio Escalera (CVC)

Acknowledgements:

Michael Lin (Complex Objects)

The project was funded through the S+T+ARTS AIR project, co-funded by In4Art and the European Union from call CNECT/2022/3482066 – Art and the digital: Unleashing creativity for European industry, regions, and society under grant agreement LC-01984767.